
Model Context Protocol (MCP) Overview

Model Context Protocol (MCP) is an open, standardized protocol designed to enable AI models to securely and consistently connect to external systems, tools, and data sources at runtime. MCP defines a common interface that allows large language models (LLMs) and AI agents to retrieve context, invoke actions, and interact with enterprise systems without requiring custom, hard‑coded integrations for each use case.

At its core, MCP separates AI reasoning from system connectivity. The AI model focuses on understanding intent and decision‑making, while MCP governs how the model safely accesses data, executes tools, and respects enterprise controls. This separation is critical for scaling GenAI across complex IT environments.

Why MCP Was Created

MCP was created to solve several systemic problems that limited enterprise adoption of Generative AI. First, organizations needed a way to give AI access to real‑time, authoritative data without embedding sensitive information directly into prompts. Second, enterprises required consistent governance and observability across AI interactions with business systems. Third, AI builders needed a reusable, scalable way to connect models to tools without rebuilding integrations for every new model or workflow.

From QCG’s perspective, MCP exists to bridge the long‑standing divide between experimentation and execution. It allows GenAI to move beyond proofs of concept and into repeatable, auditable, and secure operational workflows.

How MCP Was Created

MCP was created in response to the growing fragmentation in how AI models connected to tools and data. Early Generative AI implementations relied heavily on prompt engineering, custom plugins, or tightly coupled function calls that were brittle, difficult to govern, and expensive to maintain.

As AI agents capable of taking actions, rather than simply generating text, began to emerge, the lack of a universal integration standard became a major constraint.

The protocol was introduced as an open standard to address this gap, drawing inspiration from successful technology abstractions such as device drivers, API gateways, and service meshes.

Its design reflects lessons learned from enterprise integration patterns, security frameworks, and automation platforms, making it particularly relevant for production‑grade AI deployments.

The Importance of MCP

MCP is important because it enables AI to operate as a first‑class participant in enterprise workflows. Instead of acting as an isolated assistant, an MCP‑enabled model can retrieve live system data, trigger automations, update records, and collaborate with existing integration and automation platforms. This is a critical shift for organizations investing in automation, integration, and GenAI convergence.

- For enterprise leaders, MCP reduces integration sprawl, lowers security risk, and accelerates time to value.

- For IT teams, it provides a standardized way to manage AI access alongside APIs, RPA bots, and iPaaS workflows.

- For the business, MCP unlocks AI‑driven processes that are adaptive, context‑aware, and continuously improving.

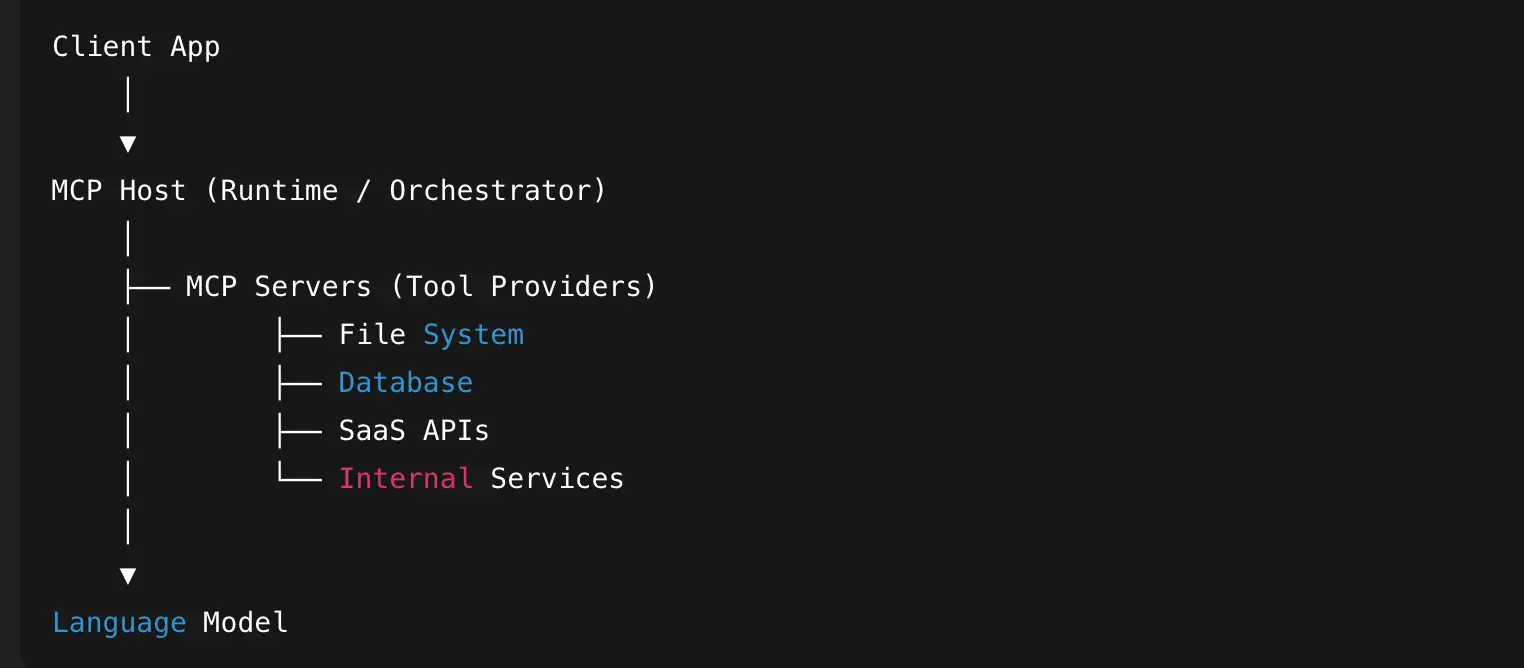

MCP Architecture and Core Components

MCP is best understood as a layered architecture that cleanly separates AI reasoning, enterprise context, and system execution. This separation is what allows MCP to scale safely across complex enterprise environments while supporting multiple models, tools, and workflows.

High-Level MCP Architecture Overview

- At a high level, MCP sits between AI models and enterprise systems.

- The AI model does not connect directly to databases, APIs, or applications. Instead, it interacts through MCP-defined interfaces that control what context can be accessed and what actions can be taken.

- Model Context Protocol (MCP) is a standardized architecture for enabling AI models to securely access external tools, data sources, and systems through a consistent interface.

- It separates four concerns: the model, the context layer, the tools/resources, and the client application.

- This enables modular, secure, and scalable AI integrations.

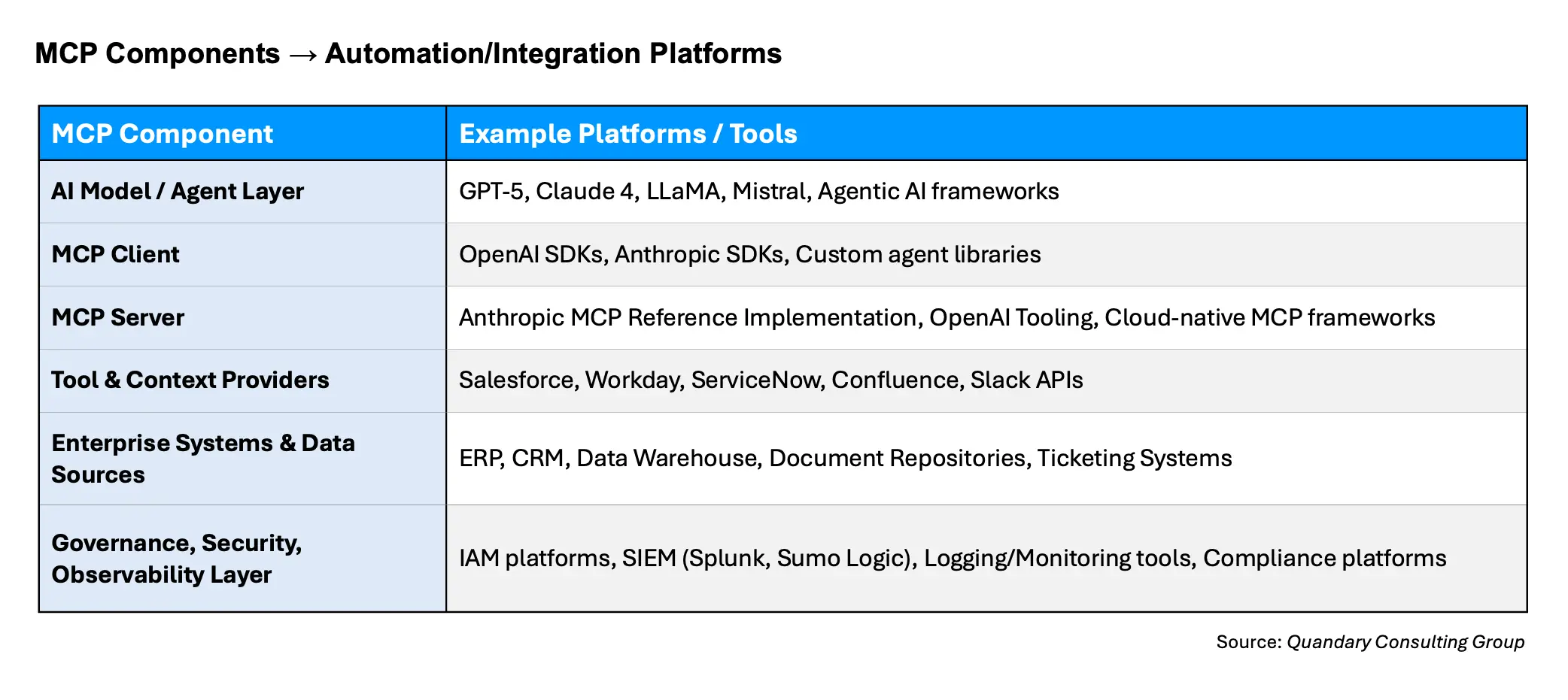

Core MCP Components

- AI Model or Agent Layer – Responsible for reasoning, planning, and decision-making.

- MCP Client – Translates model intents into MCP-compliant requests and handles structured responses.

- MCP Server – Core execution and control hub; enforces policies, validates requests, and manages communication with downstream systems.

- Tool and Context Providers – MCP-compliant connectors exposing specific capabilities with defined inputs, outputs, and permissions.

- Enterprise Systems and Data Sources – CRMs, ERPs, data warehouses, document repositories, ticketing systems, and other operational platforms.

- Governance, Security, and Observability Layer – Provides authentication, authorization, audit logging, monitoring, rate limiting, and integration with enterprise security tools.
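
The component roles above can be made concrete with a small sketch. This is not the MCP SDK: `ToolProvider`, `McpServerSketch`, the scope label `tickets:read`, and the in-memory `TICKETS` store are all hypothetical names chosen for illustration. Real MCP tools describe their inputs with a JSON Schema; the `required_inputs` mapping below is a simplified stand-in for that.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ToolProvider:
    """A connector exposing one capability with declared inputs and a permission."""
    name: str
    description: str
    required_inputs: dict[str, type]   # simplified stand-in for a JSON Schema
    required_scope: str                # hypothetical permission label
    handler: Callable[[dict[str, Any]], dict[str, Any]]


@dataclass
class McpServerSketch:
    """Control hub: validates requests and enforces policy before calling a tool."""
    tools: dict[str, ToolProvider] = field(default_factory=dict)

    def register(self, tool: ToolProvider) -> None:
        self.tools[tool.name] = tool

    def call(self, tool_name: str, arguments: dict[str, Any], scopes: set[str]) -> dict[str, Any]:
        tool = self.tools.get(tool_name)
        if tool is None:
            return {"isError": True, "message": f"unknown tool: {tool_name}"}
        # Governance check: the caller must hold the scope the tool requires.
        if tool.required_scope not in scopes:
            return {"isError": True, "message": "permission denied"}
        # Input validation: every declared input must be present with the right type.
        for key, expected in tool.required_inputs.items():
            if not isinstance(arguments.get(key), expected):
                return {"isError": True, "message": f"invalid or missing input: {key}"}
        return {"isError": False, "result": tool.handler(arguments)}


# Hypothetical ticketing connector backed by an in-memory "enterprise system".
TICKETS = {"T-100": {"status": "open", "owner": "ops"}}

server = McpServerSketch()
server.register(ToolProvider(
    name="get_ticket",
    description="Look up a ticket by ID",
    required_inputs={"ticket_id": str},
    required_scope="tickets:read",
    handler=lambda args: TICKETS.get(args["ticket_id"], {}),
))

allowed = server.call("get_ticket", {"ticket_id": "T-100"}, scopes={"tickets:read"})
denied = server.call("get_ticket", {"ticket_id": "T-100"}, scopes=set())
```

In this sketch the model layer never touches `TICKETS` directly; it only sees the structured result or a structured error, which is the separation the component list describes.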

Benefits & Risks of MCP

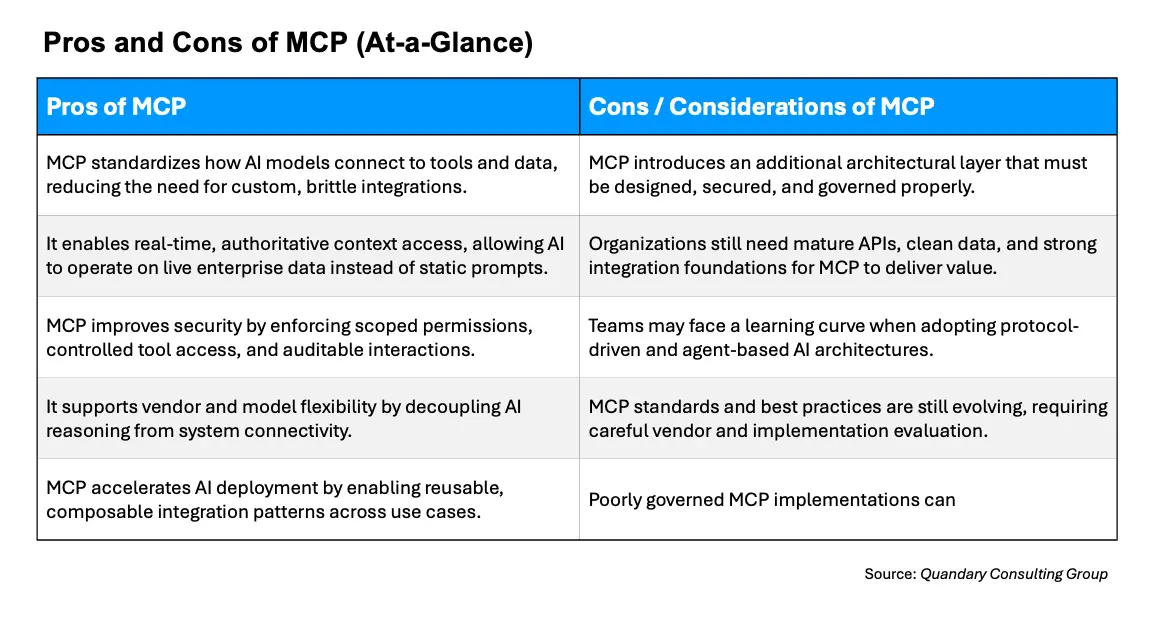

Benefits of MCP

- MCP provides standardization across AI integrations, significantly reducing custom development effort.

- It improves security by enforcing scoped access, permissions, and auditability.

- MCP increases flexibility by allowing multiple models to use the same tool interfaces, protecting organizations from vendor lock‑in.

- It also accelerates deployment by enabling reusable integration patterns across use cases.

- From an architectural standpoint, MCP supports composability, making it easier to evolve AI capabilities as models, tools, and business needs change.

Risks of MCP

- While powerful, MCP introduces additional architectural components that must be designed and governed properly.

- Organizations still need strong integration foundations; MCP does not replace data quality, API maturity, or automation discipline.

- There can also be a learning curve for teams unfamiliar with agent‑based architectures or protocol‑driven design.

- Additionally, MCP adoption is still maturing, and best practices are evolving.

- Enterprises must evaluate vendor implementations carefully to ensure alignment with security, compliance, and scalability requirements.

Top MCP Implementations and Platforms

From QCG's enterprise perspective, the following MCP‑aligned platforms and implementations are among the most relevant today:

- Anthropic MCP Reference Implementation – A foundational, open implementation that defines the core protocol and serves as a baseline for vendors and builders.

- OpenAI MCP‑Compatible Tooling – Widely adopted in GenAI ecosystems, enabling structured tool access and agent workflows at scale.

- Cloud Provider MCP Frameworks – Emerging implementations within major cloud ecosystems that integrate MCP concepts with native security and identity controls.

- iPaaS‑Integrated MCP Solutions – Platforms that embed MCP concepts directly into automation and integration workflows, enabling AI‑driven orchestration.

- Custom Enterprise MCP Gateways – Purpose‑built implementations designed for highly regulated or complex environments where control and observability are paramount.

What Happens When an AI Agent Uses MCP?

When an AI agent uses Model Context Protocol (MCP), something subtle but powerful happens: the agent stops being a standalone language model and starts behaving like a connected system. Instead of relying only on its training data, it can dynamically discover tools, access live data, and execute structured actions in real time. The result is a shift from “generate text” to “orchestrate outcomes.”

But what actually happens under the hood?

From the moment a user sends a prompt to the final structured response, an MCP-enabled agent follows a precise runtime flow. It interprets intent, negotiates capabilities with available servers, selects the appropriate tools, executes calls, and integrates results back into its reasoning loop. Each step is coordinated through a standardized protocol designed to make tool use reliable, secure, and extensible.
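
As a rough illustration of what travels over the wire during that flow, the sketch below builds three request messages. MCP frames its messages as JSON-RPC 2.0, and the method names `initialize`, `tools/list`, and `tools/call` come from the MCP specification. The helper `request`, the client name, the tool name `get_ticket`, and the protocol version string are illustrative assumptions, and the sketch omits responses and the follow-up `notifications/initialized` message.

```python
import json
from itertools import count
from typing import Any, Optional

_ids = count(1)  # JSON-RPC requests carry an id so responses can be matched


def request(method: str, params: Optional[dict[str, Any]] = None) -> dict[str, Any]:
    """Build a JSON-RPC 2.0 request envelope."""
    msg: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# 1. Capability negotiation: the client announces itself and what it supports.
initialize = request("initialize", {
    "protocolVersion": "2025-06-18",   # example version string
    "capabilities": {},
    "clientInfo": {"name": "example-client", "version": "0.1.0"},
})

# 2. Discovery: ask the server which tools it exposes.
list_tools = request("tools/list")

# 3. Invocation: call one tool with structured arguments.
call_tool = request("tools/call", {
    "name": "get_ticket",              # hypothetical tool name
    "arguments": {"ticket_id": "T-100"},
})

for message in (initialize, list_tools, call_tool):
    print(json.dumps(message))
```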

Understanding this step-by-step runtime flow is key to understanding why MCP matters. It’s not just about plugging in APIs — it’s about creating a predictable handshake between models and external systems. In this article, we’ll walk through exactly what happens when an agent uses MCP, tracing the journey from user request to completed action and revealing how structured tool orchestration transforms AI from reactive text generator to active digital collaborator.

- User or System Request – A business user or automated process initiates a request that requires AI reasoning and action.

- AI Model Processes Request – The AI model or agent determines the necessary steps and formulates a plan.

- MCP Client Request – The AI model communicates via the MCP client, issuing structured requests to the MCP server.

- MCP Server Validation – The server validates the request, enforces access policies, checks permissions, and identifies relevant tool/context providers.

- Tool or Data Access – MCP invokes the appropriate connectors to retrieve data or execute actions against enterprise systems.

- Response Handling – Results from the enterprise systems are returned through the MCP server and client back to the AI model.

- Decision or Action Completion – The AI model interprets results, may perform additional steps, and completes the intended workflow.

- Logging and Monitoring – All interactions are logged, monitored, and audited through the governance and observability layer for compliance and performance tracking.
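
The sequence above can be sketched end to end in a few lines. This is a toy simulation under stated assumptions, not a real MCP server: `POLICY`, `CONNECTORS`, `mcp_server_handle`, `agent_run`, and the `lookup_order` tool are hypothetical, and the "enterprise system" is a lambda returning canned data.

```python
import time
from typing import Any

AUDIT_LOG: list[dict[str, Any]] = []

# Hypothetical policy table: which principal may call which tools.
POLICY = {"analyst": {"lookup_order"}}

# Hypothetical connectors standing in for enterprise systems.
CONNECTORS = {
    "lookup_order": lambda args: {"order_id": args["order_id"], "status": "shipped"},
}


def mcp_server_handle(principal: str, tool: str, arguments: dict[str, Any]) -> dict[str, Any]:
    """Server side: validate, invoke the connector, return the result, and log."""
    entry = {"ts": time.time(), "principal": principal, "tool": tool, "outcome": "error"}
    try:
        if tool not in POLICY.get(principal, set()):
            entry["outcome"] = "denied"
            return {"isError": True, "message": "not permitted"}
        result = CONNECTORS[tool](arguments)
        entry["outcome"] = "ok"
        return {"isError": False, "result": result}
    finally:
        # Every interaction is recorded, whether it succeeded, was denied, or raised.
        AUDIT_LOG.append(entry)


def agent_run(principal: str, order_id: str) -> str:
    """Agent side: issue a structured request and interpret the structured response."""
    response = mcp_server_handle(principal, "lookup_order", {"order_id": order_id})
    if response["isError"]:
        return f"Could not complete request: {response['message']}"
    return f"Order {order_id} is {response['result']['status']}."
```

Calling `agent_run("analyst", "A-1")` takes the permitted path, while `agent_run("guest", "A-1")` is rejected at validation. Both calls leave an entry in `AUDIT_LOG`, which mirrors the final logging step.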

Common MCP Architecture Mistakes to Avoid

- Bypassing Security and Governance Layers – Directly connecting AI models to systems without MCP controls can create compliance and risk exposure.

- Tightly Coupling Models to Tools – Hard-coding integrations reduces flexibility, increases maintenance burden, and hinders scaling.

- Ignoring Observability – Failing to log and monitor interactions limits auditability, troubleshooting, and performance optimization.

- Underestimating Data Quality Needs – MCP enables access but does not solve poor data hygiene; garbage in results in garbage out.

- Neglecting Rate Limits and API Constraints – Overloading enterprise systems can occur without proper throttling and validation.

- Overcomplicating Architecture – Adding unnecessary layers or connectors can introduce latency and operational complexity.
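
For the rate-limit point in particular, one common throttling technique is a token bucket placed in front of each downstream system. The sketch below is a generic example rather than anything MCP prescribes; the class name `TokenBucket` and its parameters are assumptions for illustration.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate_per_sec` tokens per second."""

    def __init__(self, rate_per_sec: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = float(capacity)   # start with a full bucket
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        """Return True and consume a token if a call may proceed right now."""
        now = self.clock()
        # Refill in proportion to elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A gateway could keep one bucket per tool or per downstream API and reject or queue tool calls when `allow()` returns `False`, so that an agent looping on a tool cannot overload the system behind it.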

MCP Client & Server Ecosystem

Since its introduction in late 2024, MCP has experienced explosive growth. Some MCP marketplaces claim more than 16,000 unique servers at the time of writing, but the real number (including servers that aren’t made public) could be considerably higher.

Examples of MCP Clients:

- Claude Desktop: The original, first-party desktop application with comprehensive MCP client support

- Claude Code: Command-line interface for agentic coding, complete with MCP capabilities

- Cursor: The premier AI-enhanced IDE with one-click MCP server installation

- Windsurf: Previously known as Codeium, an IDE with MCP support through the Cascade client

- Continue: Open-source AI coding companion for JetBrains and VS Code

- Visual Studio Code: Microsoft’s IDE, which added MCP support in June 2025

- JetBrains IDEs: Full coding suite that added AI Assistant MCP integration in August 2025

- Xcode: Apple’s IDE, which received MCP support through GitHub Copilot in August 2025

- Eclipse: Open-source IDE with MCP support through GitHub Copilot as of August 2025

- Zed: Performance-focused code editor with MCP prompts as slash commands

- Sourcegraph Cody: AI coding assistant implementing MCP through OpenCtx

- LangChain: Framework with MCP adapters for agent development

- Firebase Genkit: Google’s AI development framework with MCP support

- Superinterface: Platform for adding in-app AI assistants with MCP functionality

Notably, IDEs like Cursor and Windsurf have turned MCP server setup into a one-click affair. This dramatically lowers the barrier for developer adoption, especially among those already using AI-enabled tools. However, consumer-facing applications like Claude Desktop still require manual configuration with JSON files, highlighting an increasingly apparent gap between developer tooling and consumer use cases.
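
To give a sense of what that manual configuration involves, the sketch below generates the kind of JSON that Claude Desktop reads. The `mcpServers` key with per-server `command` and `args` entries follows the commonly documented layout, and `@modelcontextprotocol/server-filesystem` is the reference filesystem server's npm package. The helper name `build_desktop_config` and the directory path are placeholders.

```python
import json


def build_desktop_config(allowed_dir: str) -> dict:
    """Return a config that launches the filesystem server for one directory."""
    return {
        "mcpServers": {
            "filesystem": {
                "command": "npx",
                "args": ["-y", "@modelcontextprotocol/server-filesystem", allowed_dir],
            }
        }
    }


# Placeholder path; substitute a directory the server should be allowed to access.
config_text = json.dumps(build_desktop_config("/Users/example/Documents"), indent=2)
print(config_text)
```

The printed JSON would typically be saved as `claude_desktop_config.json`; the file's location depends on the operating system.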

MCP Servers

The MCP ecosystem comprises a diverse range of servers including reference servers (created by the protocol maintainers as implementation examples), official integrations (maintained by companies for their platforms), and community servers (developed by independent contributors).

Reference servers demonstrate core MCP functionality and serve as examples for developers building their own implementations. These servers, maintained by MCP project contributors, include fundamental integrations like:

Git

- This server offers tools to read, search, and manipulate Git repositories via LLMs.

- While relatively simple in its capabilities, the Git MCP reference server provides an excellent model for building your own implementation.

Filesystem

- Node.js server that uses MCP for filesystem operations: reading and writing files, creating and deleting directories, and searching.

- The server offers dynamic directory access via Roots, a recent MCP feature that outlines the boundaries of server operation within the filesystem.
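
The boundary idea behind Roots can be sketched as a path check. This is not the reference server's code (which is written for Node.js) and it uses plain paths rather than the URIs a real client would supply; `is_within_roots` and the example directories are hypothetical.

```python
from pathlib import Path


def is_within_roots(candidate: str, roots: list[str]) -> bool:
    """Return True if the resolved candidate path lies inside one of the roots."""
    # Resolving first collapses ".." segments and symlinks, so a path cannot
    # escape a root by traversal.
    target = Path(candidate).resolve()
    for root in roots:
        root_path = Path(root).resolve()
        if target == root_path or root_path in target.parents:
            return True
    return False


roots = ["/srv/projects"]
inside = is_within_roots("/srv/projects/app/readme.md", roots)
escaped = is_within_roots("/srv/projects/../secrets.txt", roots)
```

Comparing against `target.parents` rather than using a string prefix test avoids treating a sibling such as `/srv/projects-other` as if it were inside `/srv/projects`.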

Fetch

- This MCP server fetches web content and converts HTML to markdown for easier consumption by LLMs.

- This allows LLMs to retrieve and process online content with greater speed and accuracy.
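
To show why the HTML-to-markdown step helps, here is a deliberately minimal converter built on Python's standard `html.parser`. It is an illustrative sketch, not the Fetch server's actual conversion logic; `MarkdownExtractor` and `html_to_markdown` are hypothetical names, and only headings, paragraphs, links, and list items are handled.

```python
import re
from html.parser import HTMLParser


class MarkdownExtractor(HTMLParser):
    """Collect a small subset of HTML as markdown text."""
    HEADINGS = {"h1": "# ", "h2": "## ", "h3": "### "}
    SKIPPED = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self.skip_depth = 0   # > 0 while inside <script> or <style>
        self.href = None      # target of the link currently being read

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self.skip_depth += 1
        elif tag in self.HEADINGS:
            self.parts.append("\n\n" + self.HEADINGS[tag])
        elif tag == "p":
            self.parts.append("\n\n")
        elif tag in ("ul", "ol"):
            self.parts.append("\n")
        elif tag == "li":
            self.parts.append("\n- ")
        elif tag == "a":
            self.href = dict(attrs).get("href")
            self.parts.append("[")

    def handle_endtag(self, tag):
        if tag in self.SKIPPED:
            self.skip_depth = max(0, self.skip_depth - 1)
        elif tag == "a":
            self.parts.append(f"]({self.href or ''})")
            self.href = None

    def handle_data(self, data):
        if self.skip_depth == 0:
            # Collapse runs of whitespace but keep word boundaries intact.
            self.parts.append(re.sub(r"\s+", " ", data))


def html_to_markdown(html: str) -> str:
    parser = MarkdownExtractor()
    parser.feed(html)
    parser.close()
    return "".join(parser.parts).strip()


sample = (
    "<h1>Release Notes</h1>"
    '<p>See the <a href="https://example.com/docs">docs</a> for details.</p>'
    "<ul><li>Faster sync</li><li>Bug fixes</li></ul>"
    "<script>track()</script>"
)
markdown = html_to_markdown(sample)
print(markdown)
```

The output drops the script content and keeps only the readable structure, which is the property that makes fetched pages cheaper for a model to process.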

Strategic Takeaway for Enterprise Leaders

MCP is not just a technical protocol; it is a strategic enabler for operational AI. It provides the missing link between GenAI intelligence and enterprise execution. Organizations that adopt MCP thoughtfully can move faster, scale safer, and integrate AI more deeply into their core business processes.

From Quandary Consulting Group’s perspective, MCP should be evaluated as part of a broader automation and integration roadmap. When combined with strong process design, modern integration platforms, and responsible AI governance, MCP becomes a catalyst for transforming how enterprises work, decide, and innovate.

- BY: Kevin Shuler

- Email: kevin@quandarycg.com

- Date: 01/13/2026


© 2026 Quandary Consulting Group. All Rights Reserved.
