
AI Overview

Artificial Intelligence (AI) is a branch of computer science focused on building systems that can perform tasks that typically require human intelligence. These tasks include learning from experience, recognizing patterns, understanding language, solving problems, and making decisions. AI systems are designed to analyze data, identify relationships within that data, and use those insights to produce predictions, recommendations, or actions.

AI is commonly described in terms of capability, most often as Narrow AI or General AI. Narrow AI, also known as weak AI, is designed to perform specific tasks within a limited domain. Examples include virtual assistants, recommendation engines, fraud detection systems, and language translation tools. General AI, sometimes referred to as strong AI, describes a theoretical system capable of performing any intellectual task that a human can perform. General AI does not currently exist.

Most modern AI applications are powered by Machine Learning (ML), a subset of AI that enables systems to learn from data rather than relying solely on explicitly programmed rules. Within Machine Learning, Deep Learning uses multi-layered neural networks to process complex data such as images, speech, and natural language.

Other important subfields include Natural Language Processing (NLP), which focuses on enabling machines to understand and generate human language, and Computer Vision, which allows systems to interpret and analyze visual information.

AI technologies are widely used across industries. Common applications include customer service chatbots, predictive analytics in finance, medical imaging analysis in healthcare, supply chain optimization, cybersecurity monitoring, and generative tools that create text, images, audio, or code. These systems help organizations improve operational efficiency, reduce manual effort, enhance decision-making, and deliver more personalized experiences.

As AI adoption continues to expand, organizations must also consider ethical, legal, and governance implications. Responsible AI practices include ensuring data privacy, minimizing bias, maintaining transparency, and implementing oversight mechanisms. Effective AI implementation requires not only technical capability but also strategic alignment with business objectives and regulatory standards.

This overview provides a foundational understanding of Artificial Intelligence: where it came from, when it started, how it works, its core components, and its practical applications across industries.

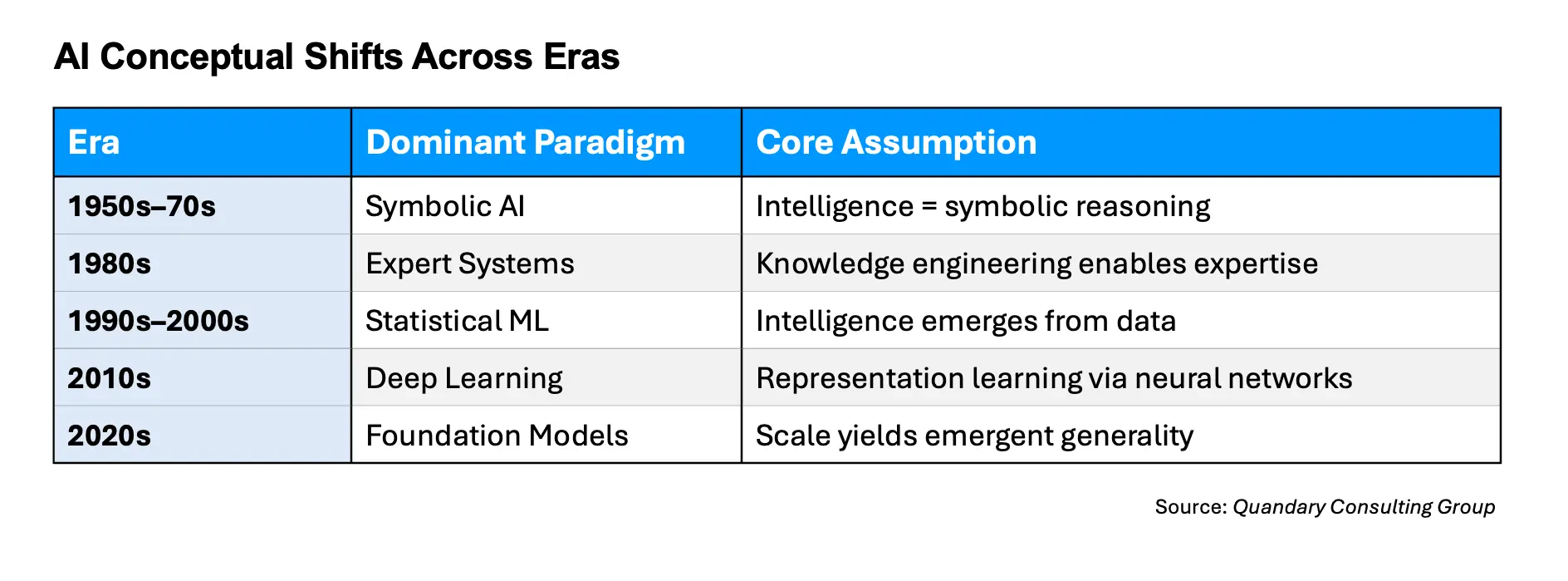

The Brief History of Artificial Intelligence

We consider artificial intelligence a young science, but since the earliest days, humans have fantasized about inanimate objects with human-like reasoning powers. The concept appears among the ancient Greeks and Egyptians and again in the Middle Ages, which is where the concrete idea of artificial intelligence was born.

Scientists took the first steps towards its realization in the 19th century, when Charles Babbage designed the Analytical Engine, a general-purpose programmable computing machine, and Augusta Ada Byron, Countess of Lovelace, wrote what is widely regarded as the first program for it. Although the machine was never completed, the design laid the basis for a machine that could be programmed and, eventually, serve as AI.

- 1930s–1956, Intellectual Foundations. The conceptual roots of AI lie in formal logic, computation theory, and early neuroscience.

- Alan Turing (1936) introduced the formal model of computation now known as the Turing machine, establishing the theoretical limits of computability.

- The Church–Turing thesis formalized the idea that any effectively calculable function can be computed by a Turing machine. Early cybernetics (Norbert Wiener) explored feedback systems and control theory.

- McCulloch & Pitts (1943) proposed a mathematical model of artificial neurons.

- Hebb (1949) introduced the idea of synaptic learning (“cells that fire together wire together”). These works established the two enduring paradigms of AI:

- Symbolic Reasoning: Symbolic reasoning (or Symbolic AI) is a classical artificial intelligence paradigm that uses explicit, human-readable symbols, rules, and logic to represent knowledge and solve problems. Unlike machine learning, which infers patterns from data, symbolic systems rely on pre-defined, top-down rules (e.g., AND, OR, NOT) to manipulate knowledge structures for tasks like planning, theorem proving, and expert systems.

- Connectionist Learning: Connectionism is an approach to AI that models intelligence as emerging from networks of simple, neuron-like units. Instead of following hand-written rules, a connectionist system learns by adjusting the strengths (weights) of the connections between units, as anticipated by the McCulloch and Pitts neuron model and Hebb's learning rule. It should not be confused with 'connectivism', a 21st-century theory of human learning developed by George Siemens and Stephen Downes.

- 1940s. The idea continued to develop, with each successor adding a contribution.

- Thus, in 1945, Princeton mathematician John von Neumann described the structure of a computer that could store both data and programs in its memory, while Warren McCulloch and Walter Pitts had begun a deeper investigation of 'neural networks' in 1943.

- The 1950s. In 1950, Alan Turing’s paper “Computing Machinery and Intelligence” was published, introducing what became known as the Turing Test.

- Turing Test (1950): Turing, an English mathematician, logician, and cryptographer, was among the first to ask whether machines could think like humans, and he proposed a test that was supposed to answer this question. The Turing Test checks whether a computer can give examiners responses that deceive them into thinking it is a human being. This test remains an essential part of the history and origins of AI to this day.

- 1950s and 1960s. This was a significant period for the development of AI, and governments around the world started to recognize its potential. The period is characterized by strong optimism and foundational system-building. Some key developments during this period were:

- Dartmouth College (1956) hosted a summer conference attended by the most eminent names in the field of AI, including Marvin Minsky, Oliver Selfridge, and John McCarthy, who coined the term artificial intelligence.

- Logic Theorist (1956), often described as the first AI program, was presented at the conference and proved mathematical theorems. The conference proposal stated that “every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.”

- General Problem Solver (GPS) (1957) was a pioneering AI program developed by Allen Newell and Herbert A. Simon that simulated human problem-solving using "means-ends analysis". It worked by breaking goals into smaller subgoals, comparing the current state to the target state, and applying operators to reduce the differences. It was designed for domain-independent tasks like logic puzzles and math proofs.

- Lisp (1958), short for "List Processor," was designed by John McCarthy for symbolic computation and was the premier language for AI research from the 1960s onward. Lisp excels at manipulating symbolic data, recursion, and rapid prototyping, making it well suited to expert systems, theorem provers, and AI planning. Key features include code-as-data syntax, dynamic typing, and powerful macros. Lisp is still in use today.

- Three key historical applications of Lisp in AI:

- Expert Systems: Programs like the American Express Authorizer's Assistant for fraud detection.

- Natural Language Processing: Early systems like SHRDLU, along with widely used Lisp reimplementations of ELIZA.

- Planning and Logistics: DART (Dynamic Analysis and Replanning Tool), which proved pivotal in the first Gulf War


- Shakey the Robot (1960s) integrated perception, planning, and action, but was domain-limited.

- ELIZA (1966), created by MIT professor Joseph Weizenbaum, was an early natural language processing program that demonstrated pattern-based conversation.

- Perceptrons (1969), by Marvin Minsky and Seymour A. Papert, was the first systematic study of parallelism in computation. It marked a historic turn in artificial intelligence by rigorously examining the idea that intelligence might emerge from the activity of networks of neuron-like entities, and its analysis of what single-layer perceptrons cannot compute dampened neural network research for years.

- To read a free copy of 'Perceptrons', visit: An Introduction to Computational Geometry (MIT Press Direct)

- The 1970s and 1980s saw a lull in AI research and development as governments and industries cut off funding. In the 1980s, research into deep learning techniques and the adoption of Edward Feigenbaum’s expert systems gave rise to hope that AI research could continue. Still, funding remained scarce until the mid-1990s. Some key developments during this period were:

- Expert Systems (1970s): An expert system is an Artificial Intelligence (AI) program that simulates the decision-making ability of a human expert to solve complex, specific problems using a knowledge base and inference rules. These systems use "if-then" logic to provide, for example, medical diagnoses, technical support, or financial advice, typically complementing rather than replacing human experts. Two key expert systems that came from this period were:

- MYCIN (medical diagnosis) (early 1970s) was a pioneering expert system developed at Stanford University by Edward Shortliffe under the guidance of Bruce Buchanan and Stanley Cohen. It was designed to assist physicians with diagnosis and treatment recommendations for infectious diseases, particularly blood infections (bacteraemia) and meningitis.

- XCON (DEC configuration system) (1978): XCON (eXpert CONfigurer), originally known as R1, was a pioneering rule-based expert system developed for the Digital Equipment Corporation (DEC) by John McDermott of Carnegie Mellon University. It was designed to automatically configure VAX computer systems based on specific customer requirements, replacing a slow, error-prone manual process.

- Lighthill Report (1973): The Lighthill Report, written by the scholar James Lighthill, supported AI research related to automation and to computer simulation of neurophysiological and psychological processes. However, it was highly critical of basic research in foundational areas such as robotics and language processing; as a result, AI funding in the UK was cut.

- To download a free PDF copy of Lighthill's report, visit: Lighthill Report: Artificial Intelligence: a paper symposium. To read the full transcript of a debate about the report, visit: The Lighthill Debate on AI from 1973: An Introduction and Transcript (GitHub)

- Physical Symbol System Hypothesis (PSSH) (1976), proposed by Allen Newell and Herbert Simon, holds that a physical symbol system possesses the necessary and sufficient means for general intelligent action. It also suggests that human thinking and machine AI are both forms of symbol manipulation, in which physical patterns are combined and processed.

- 'Probabilistic Reasoning in Intelligent Systems' (1988), by Judea Pearl. This book transformed AI by showing that uncertainty can be represented, computed, and reasoned about systematically using probabilistic graphical models, most notably Bayesian networks (also known as belief networks or Bayesian belief networks). A Bayesian network represents variables and their conditional dependencies via a Directed Acyclic Graph (DAG). These networks are widely used for predictive analytics, diagnostics, and modeling complex, uncertain systems.

- To read a free copy of 'Probabilistic Reasoning in Intelligent Systems', visit: Judea Pearl - Probabilistic Reasoning in Intelligent Systems (The Swiss Bay)


- Since the 1990s, we have witnessed rapid development in computer science and AI that continues today. Some key historical AI events from this period were:

- 'An Overview of Statistical Learning Theory' (1999), by Vladimir Vapnik (co-creator of Support Vector Machines), summarizes statistical learning theory, a mathematical framework for understanding how and when machine learning models generalize well from data. The theory provides the theoretical foundation for modern supervised learning.

- To read a free copy of 'An Overview of Statistical Learning Theory', visit: An Overview of Statistical Learning Theory (MIT)

- 1997, IBM Deep Blue was a supercomputer developed by IBM specifically to play chess. It became famous for defeating the reigning world chess champion, Garry Kasparov, in a six-game match, the first time a computer beat a world champion in a match under standard tournament conditions.

- 2000s and 2010s. Advances in machine learning (ML), data availability, and faster processors allowed AI systems to scale.

- The Neural Network Renaissance refers to the period, roughly starting in the mid-2000s and accelerating in the 2010s, when neural networks (especially deep learning) made a dramatic comeback and began outperforming other AI approaches. This renaissance brought several historic breakthroughs in AI:

- 2011, IBM's Watson, often described as the successor to Deep Blue, won the quiz show Jeopardy! as a question-answering system. Today, IBM Watson is IBM’s suite of AI and data services, combining machine learning, natural language processing (NLP), automation, and analytics tools designed for enterprise use. It is a collection of AI products under the broader IBM AI and data platform, including:

- Watsonx.ai – AI model development and deployment

- Watsonx.data – Data lakehouse for AI workloads

- Watsonx.governance – AI governance and risk management

- Industry-specific solutions (healthcare, finance, customer service, etc.)

- 2012, AlexNet achieved a dramatic improvement in image classification on the ImageNet benchmark.

- 2016, AlphaGo combined deep neural networks with reinforcement learning and Monte Carlo tree search and defeated world champion Lee Sedol. Its success reinforced the shift in AI research from symbolic abstraction toward representation learning.

- 2017, the paper “Attention Is All You Need,” by Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Kaiser and Illia Polosukhin, introduced the Transformer, a self-attention architecture that is parallelizable, scalable, and highly effective for sequence modeling.

- Academia has made a PDF version of the research paper "Attention is All You Need" free for the public to download; to download a copy, visit: Academia: "Attention is All You Need"

- 2018, 'Reinforcement Learning: An Introduction' (second edition), by Richard Sutton and Andrew Barto, is a foundational textbook (often called the "bible of RL") that provides a comprehensive introduction to, and framework for, computational reinforcement learning. It details how agents learn to make optimal decisions by interacting with environments to maximize cumulative rewards.

- To read a free copy of 'Reinforcement Learning: An Introduction (second edition)', visit: Reinforcement Learning: An Introduction (Carnegie Mellon University)


- In the 2020s, generative models became central. GPT-4, Stable Diffusion, and multimodal systems can now produce text, images, and music. AI moved from research environments into widespread industrial and consumer use.

- November 2022 brought the public launch of ChatGPT. OpenAI released ChatGPT to the public as a free research preview. Within 5 days, it reached 1 million users, making it one of the fastest-growing consumer applications in history. This marked the first time millions of people could interact with advanced AI through natural conversation.

- In 2023, generative AI rapidly moved into everyday life:

- Businesses integrated ChatGPT into workflows

- Microsoft embedded GPT into Office (Copilot) and Bing

- Google accelerated AI features across its products

- Developers built thousands of AI-powered apps

- AI became central in education, marketing, coding, and research

What are the Main Types of Artificial Intelligence (AI)?

Artificial Intelligence (AI) can be categorized into distinct types based on capability and functionality. The most widely accepted classification divides AI into Narrow AI, General AI, and Superintelligent AI, based on the level of intelligence demonstrated by the system.

Narrow AI (Artificial Narrow Intelligence)

- Narrow AI, also referred to as Weak AI, is designed to perform a specific task or a limited range of tasks. These systems operate within predefined boundaries and cannot perform outside their programmed domain. Narrow AI does not possess consciousness, self-awareness, or genuine understanding; instead, it uses data-driven models and algorithms to simulate intelligent behavior.

- Examples of Narrow AI include virtual assistants, recommendation engines, fraud detection systems, language translation tools, and autonomous vehicle navigation systems. Nearly all AI systems currently in use today fall into this category.

General AI (Artificial General Intelligence)

- Artificial General Intelligence (AGI), also known as Strong AI, refers to a theoretical form of AI that would possess the ability to understand, learn, and apply knowledge across a wide range of tasks at a level comparable to human intelligence.

- Unlike Narrow AI, General AI would not be limited to a single function or domain. It would be capable of reasoning, problem-solving, abstract thinking, and adapting to new and unfamiliar situations without requiring task-specific programming.

- At present, General AI does not exist. Research in this area continues, but current AI technologies have not achieved human-level cognitive flexibility.

Superintelligent AI (Artificial Superintelligence)

- Artificial Superintelligence (ASI) describes a hypothetical AI system that would surpass human intelligence across all domains, including creativity, decision-making, emotional intelligence, and scientific reasoning.

- Such a system would be capable of independently improving itself and potentially operating beyond human control or comprehension.

- Superintelligent AI remains speculative and is primarily discussed in academic, philosophical, and ethical contexts. It does not currently exist and presents significant theoretical considerations related to governance, safety, and societal impact.

In summary, the main types of AI are categorized by their level of cognitive capability. Narrow AI is currently deployed in real-world applications, while General AI and Superintelligent AI remain theoretical concepts under research and debate.

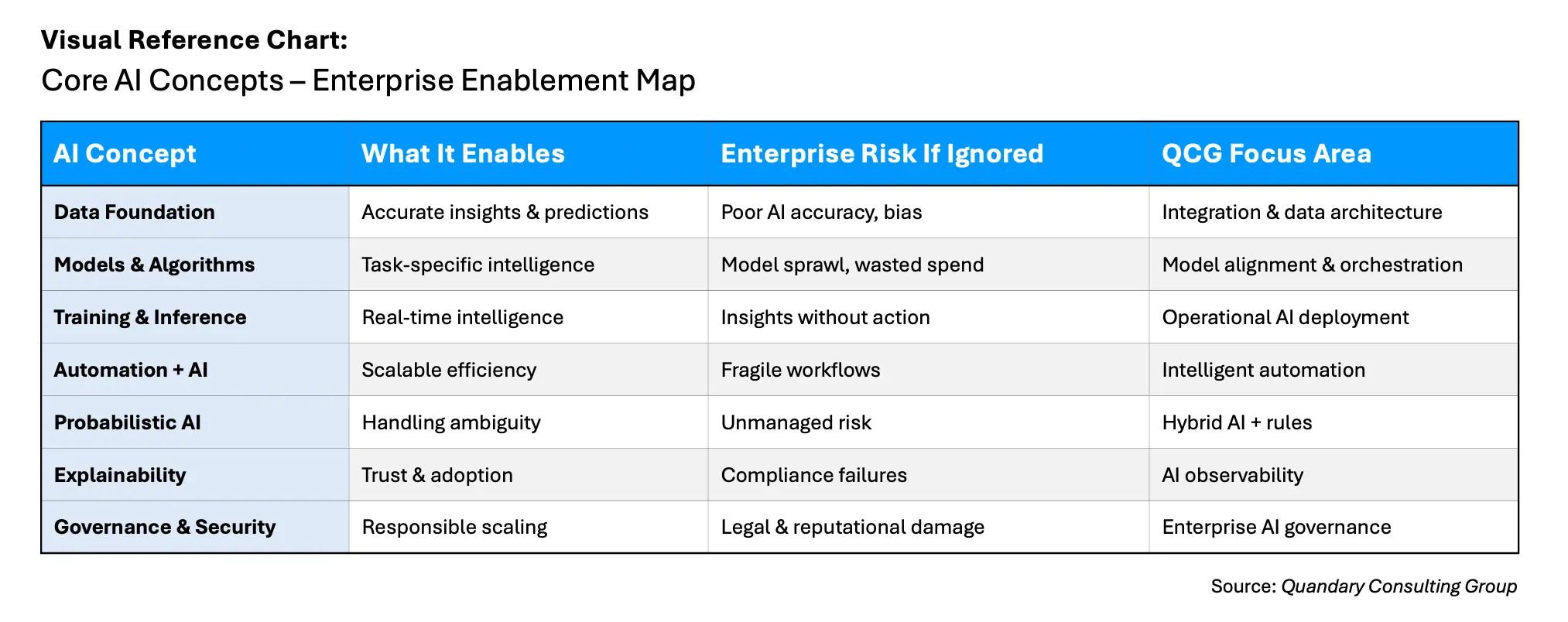

What are the Core AI Concepts?

Artificial Intelligence (AI) is built upon several foundational concepts that enable systems to simulate intelligent behavior. Understanding these core concepts provides clarity on how AI systems are designed, trained, and deployed across various applications.

Machine Learning (ML)

- Machine Learning (ML) is a subset of Artificial Intelligence that enables systems to learn from data rather than relying solely on explicit programming.

- In traditional software development, rules are manually coded to produce specific outputs. In contrast, machine learning systems identify patterns within large datasets and use those patterns to make predictions or decisions.

- Machine learning models can improve over time as they are retrained on additional, representative data.

- Common approaches within machine learning include supervised learning, unsupervised learning, and reinforcement learning.

- Supervised learning relies on labeled data to train models, while unsupervised learning identifies hidden patterns in unlabeled data. Reinforcement learning trains models through trial and error by rewarding desired outcomes.
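As a minimal sketch of supervised learning, the example below fits a straight line to a handful of labeled points using ordinary least squares, so the "rule" (a slope and an intercept) is estimated from data instead of being hand-coded. The data values and the function names `fit_line` and `predict` are illustrative assumptions, not part of any particular library.

```python
from statistics import mean


def fit_line(xs, ys):
    """Estimate slope and intercept by ordinary least squares."""
    x_bar, y_bar = mean(xs), mean(ys)
    # Covariance of x and y divided by the variance of x gives the slope.
    cov_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    var_x = sum((x - x_bar) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = y_bar - slope * x_bar
    return slope, intercept


def predict(model, x):
    """Apply the learned line to a new, unseen input."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical labeled training data: each input has a known output.
inputs = [1, 2, 3, 4]
labels = [3, 5, 7, 9]

model = fit_line(inputs, labels)
print(model)              # (2.0, 1.0)
print(predict(model, 5))  # 11.0
```

Nothing in the code states that the output is "twice the input plus one"; that relationship is recovered from the labeled examples, which is the contrast with manually coded rules described above.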

Deep Learning

- Deep Learning is a specialized subset of machine learning that uses artificial neural networks with multiple layers to process complex data.

- These neural networks are inspired by the structure of the human brain and are particularly effective for tasks involving large volumes of unstructured data, such as images, audio, and text.

- Deep learning models power many modern AI applications, including speech recognition systems, image classification tools, and generative AI platforms.

- The performance of deep learning systems typically improves as the volume of training data increases.

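To illustrate what "multiple layers" means, the sketch below runs a forward pass through a tiny two-layer network. The weights are picked by hand purely for illustration (real networks learn their weights from data), and the names `dense` and `xor_network` are hypothetical. The hidden layer lets the network compute XOR, a function that a single-layer perceptron cannot represent.

```python
def relu(x):
    """Rectified linear unit: passes positive values, zeroes out negatives."""
    return max(0.0, float(x))


def identity(x):
    """Linear activation used for the output neuron."""
    return float(x)


def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(w . x + b) for each neuron."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def xor_network(x1, x2):
    # Hidden layer: two ReLU neurons (weights chosen by hand, not trained).
    hidden = dense([x1, x2], weights=[[1, 1], [1, 1]], biases=[0, -1], activation=relu)
    # Output layer: one linear neuron combining the hidden activations.
    (output,) = dense(hidden, weights=[[1, -2]], biases=[0], activation=identity)
    return output


# Outputs follow the XOR truth table: 0.0, 1.0, 1.0, 0.0
for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, xor_network(a, b))
```

Each layer transforms the output of the previous one; stacking more such layers is what allows deep networks to build up progressively more complex features.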

Natural Language Processing (NLP)

- Natural Language Processing (NLP) is the field of AI focused on enabling machines to understand, interpret, and generate human language.

- NLP combines computational linguistics with machine learning techniques to process written and spoken communication.

- Applications of NLP include chatbots, sentiment analysis, language translation, document summarization, and voice assistants.

- NLP systems analyze language structure, meaning, and context to deliver accurate and relevant responses.
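A minimal sketch of one NLP task named above, sentiment analysis, is shown below. It assumes a tiny hand-made word lexicon; the word lists and the `tokenize` and `sentiment` functions are hypothetical, and production systems typically learn such associations from data instead.

```python
import re
from collections import Counter

# Hypothetical sentiment lexicon, for illustration only.
POSITIVE = {"great", "helpful", "fast", "love"}
NEGATIVE = {"slow", "broken", "unhelpful", "hate"}


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment(text):
    """Label text by counting positive versus negative lexicon words."""
    counts = Counter(tokenize(text))
    score = sum(counts[w] for w in POSITIVE) - sum(counts[w] for w in NEGATIVE)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(tokenize("The support team was GREAT!"))
# ['the', 'support', 'team', 'was', 'great']
print(sentiment("The support team was great and fast."))      # positive
print(sentiment("The app is slow and the login is broken."))  # negative
```

Word counting of this kind ignores context (for example, "not great" would still count as positive), which is why practical NLP systems also model language structure and meaning.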

Computer Vision

- Computer Vision is a branch of AI that enables systems to interpret and analyze visual information from images and videos.

- Computer vision models are trained to recognize objects, detect patterns, and extract meaningful insights from visual data.

- Common applications include facial recognition, medical imaging analysis, quality inspection in manufacturing, and autonomous vehicle navigation.

- Computer vision often relies on deep learning techniques to achieve high levels of accuracy.
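As a rough illustration of how visual patterns can be detected, the sketch below slides a small filter over a grayscale image stored as a list of lists and responds strongly where brightness increases from left to right (a vertical edge). The image values, the hand-written kernel, and the function name `convolve2d` are assumptions for illustration; deep learning models learn many such filters from data instead of having them specified by hand.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum elementwise products.

    This is the cross-correlation form that deep learning libraries
    commonly call "convolution" (the kernel is not flipped).
    """
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_rows)
                for j in range(k_cols)
            )
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]


# Hypothetical 4x4 grayscale image: dark left half (0), bright right half (9).
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]

# Responds to a left-to-right increase in brightness.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

edges = convolve2d(image, vertical_edge)
print(edges)  # [[0, 18, 0], [0, 18, 0], [0, 18, 0]]
```

The large values in the middle column mark where the dark region meets the bright one; flat regions produce zero.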

Generative AI

- Generative AI refers to systems that create new content based on patterns learned from training data.

- These systems can generate text, images, audio, video, or code.

- Generative models analyze existing data and produce outputs that resemble the original training material while introducing novel variations.

- Examples of generative AI include text generation models, image creation tools, and code assistants.

- These systems are increasingly used in content creation, software development, design, and business automation.

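The idea of producing new content from patterns learned in training data can be sketched with a toy word-level Markov chain. This is a deliberately simplified stand-in, not how modern generative models are built (those rely on large neural networks); the corpus and the function names `train_bigram_model` and `generate` are hypothetical.

```python
import random
from collections import defaultdict


def train_bigram_model(text):
    """Record, for each word, the words that followed it in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word].append(next_word)
    return followers


def generate(model, start, max_words, seed=0):
    """Sample a new word sequence by repeatedly picking an observed follower."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    output = [start]
    # Stop at the length limit or when the last word has no known follower.
    while len(output) < max_words and model.get(output[-1]):
        output.append(rng.choice(model[output[-1]]))
    return " ".join(output)


# Hypothetical training text, for illustration only.
corpus = "the model reads data the model learns patterns the system writes text"
model = train_bigram_model(corpus)
print(generate(model, start="the", max_words=6))
```

Every adjacent word pair in the output was seen during training, yet the sequence as a whole may never have appeared in the corpus, which mirrors the "resembles the training material while introducing novel variations" behavior described above.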

Together, these core AI concepts form the foundation of modern artificial intelligence systems. Machine learning provides the ability to learn from data, deep learning enhances performance on complex tasks, natural language processing enables language understanding, computer vision interprets visual inputs, and generative AI produces new content. Understanding these concepts is essential for evaluating AI capabilities and applications across industries.

The Importance of AI

Artificial Intelligence (AI) matters because it enables organizations and individuals to process information, automate tasks, and make decisions at a scale and speed that would not be possible through human effort alone. By leveraging data-driven models and advanced algorithms, AI systems can identify patterns, generate insights, and support outcomes that improve efficiency, accuracy, and innovation.

One of the primary reasons AI is important is its ability to enhance operational efficiency. AI-powered automation reduces the need for repetitive manual work, allowing employees to focus on higher-value activities such as strategic planning, creative problem-solving, and customer engagement. This shift not only increases productivity but also improves overall organizational performance.

AI also plays a critical role in improving decision-making. Advanced analytics and predictive modeling enable organizations to analyze large volumes of structured and unstructured data in real time. As a result, leaders can make more informed decisions based on evidence rather than intuition alone. In industries such as healthcare, finance, and manufacturing, data-driven insights can lead to better risk management, improved safety, and optimized resource allocation.

Another significant impact of AI is its ability to personalize experiences. AI systems can analyze user behavior, preferences, and historical data to deliver tailored recommendations, targeted marketing, and customized services. This capability enhances customer satisfaction and strengthens long-term engagement.

AI also drives innovation by enabling new products, services, and business models. Technologies such as generative AI, autonomous systems, and intelligent assistants are transforming industries and creating competitive advantages for early adopters. Organizations that effectively integrate AI into their operations are better positioned to adapt to changing market conditions and emerging opportunities.

Finally, AI matters because of its broader societal implications. When implemented responsibly, AI has the potential to address complex global challenges, including disease detection, climate modeling, supply chain optimization, and disaster response. However, the growing influence of AI also requires careful attention to governance, ethics, transparency, and accountability to ensure that its benefits are realized equitably and safely.

In summary, Artificial Intelligence matters because it enhances efficiency, strengthens decision-making, enables personalization, drives innovation, and contributes to solving large-scale challenges. Its strategic importance continues to grow as organizations increasingly rely on data and digital technologies to remain competitive in a rapidly evolving landscape.

Key AI Concepts Mapped to Real Enterprise AI Use Cases

- Data Foundation
  - Integrating ERP, CRM, and ticketing data to enable AI-driven service routing
  - Creating a unified data layer for GenAI copilots
  - Feeding predictive maintenance models from IoT + asset systems

- Models & Algorithms
  - LLMs for contract analysis and knowledge retrieval
  - Predictive models for demand forecasting
  - Vision models for quality inspection in manufacturing

- Training & Inference
  - Real-time fraud detection during transactions
  - AI-driven recommendations embedded in sales workflows
  - Automated document classification at ingestion

- Automation + Decision Support
  - AI-assisted exception handling in finance operations
  - Intelligent case triage in customer support
  - Automated compliance checks with human approval loops

- Probabilistic Intelligence
  - Confidence-scored AI recommendations for underwriting
  - Risk-based decisioning in supply chain planning
  - AI-assisted eligibility determination

- Explainability & Trust
  - Regulated decision audits in financial services
  - Explainable credit scoring outputs
  - Transparent AI reasoning for executive dashboards

- Governance & Security
  - AI usage policies across departments
  - Secure GenAI access to internal systems
  - Model monitoring and drift detection
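Two of the items above, confidence-scored recommendations and human approval loops, can be combined in a simple routing rule. The sketch below is one possible shape for that rule, not a prescribed design: the `Recommendation` class, the `route` function, the case identifiers, and the 0.95 threshold are all hypothetical and would need to be set by each organization's own risk policy.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    case_id: str
    decision: str      # e.g. "approve" or "deny"
    confidence: float  # model's estimated probability, between 0 and 1


def route(rec, auto_threshold=0.95):
    """Apply high-confidence recommendations automatically; send the rest to a person."""
    if not 0.0 <= rec.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if rec.confidence >= auto_threshold:
        return "auto_apply"
    return "human_review"


# Hypothetical recommendations produced by an upstream model.
queue = [
    Recommendation("case-001", "approve", 0.98),
    Recommendation("case-002", "deny", 0.71),
]
for rec in queue:
    print(rec.case_id, rec.decision, route(rec))
# case-001 approve auto_apply
# case-002 deny human_review
```

Keeping the threshold as an explicit parameter makes the trade-off between automation and oversight visible and auditable, which supports the explainability and governance items in the same list.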

In Conclusion:

For enterprise leaders, the real challenge with AI is not understanding what the technology can do—it’s determining how to operationalize it responsibly, repeatably, and at scale.

AI delivers sustained value only when it is embedded directly into business processes, decision flows, and system architectures. This requires a shift away from point solutions and toward AI-enabled operating models, where intelligence is delivered at the moment of action—inside workflows, APIs, and platforms employees already use.

Equally important, AI must coexist with existing enterprise systems. Most organizations are operating in hybrid environments that include legacy platforms, modern SaaS applications, and custom-built solutions. AI also introduces a new risk profile that leaders cannot ignore. Unlike traditional software, AI systems are probabilistic, continuously evolving, and often opaque without intentional design.

From Quandary's Point-of-View:

QCG views AI as a force multiplier for automation. Rule-based automation provides consistency and control; AI introduces adaptability and intelligence. When combined, organizations can move beyond basic efficiency gains toward resilient, intelligent operations that can handle exceptions, ambiguity, and scale without constant human intervention.

Ultimately, the enterprises that win with AI are those that treat it as a long-term capability, not a one-time deployment. Success requires aligning technology choices with business strategy, investing in integration and governance, and designing AI systems that evolve alongside the organization.

- By: Kevin Shuler

- Email: kevin@quandarycg.com

- Date: 10/15/2024


© 2026 Quandary Consulting Group. All Rights Reserved.
